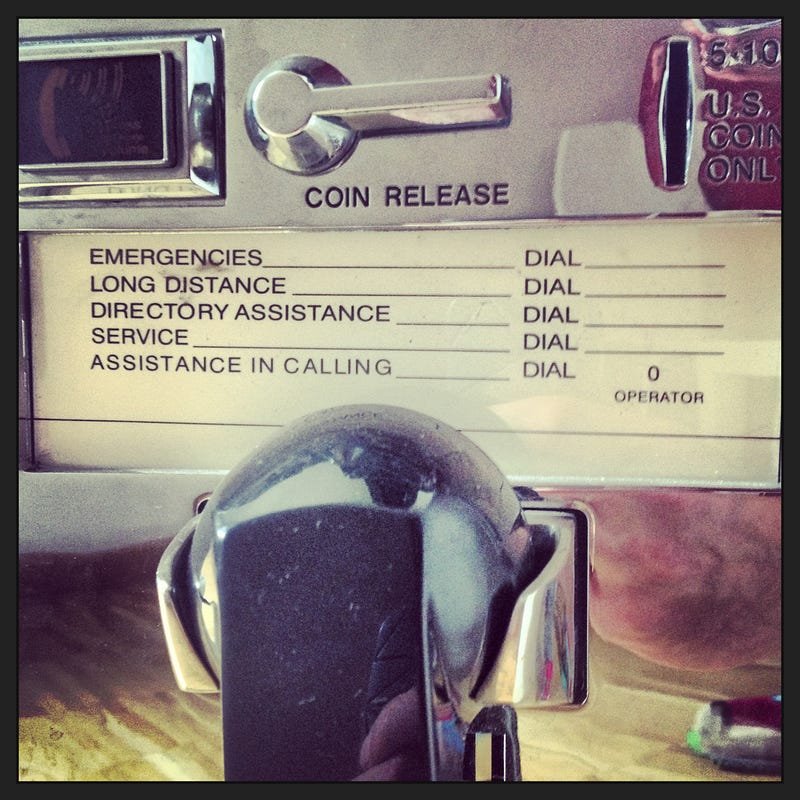

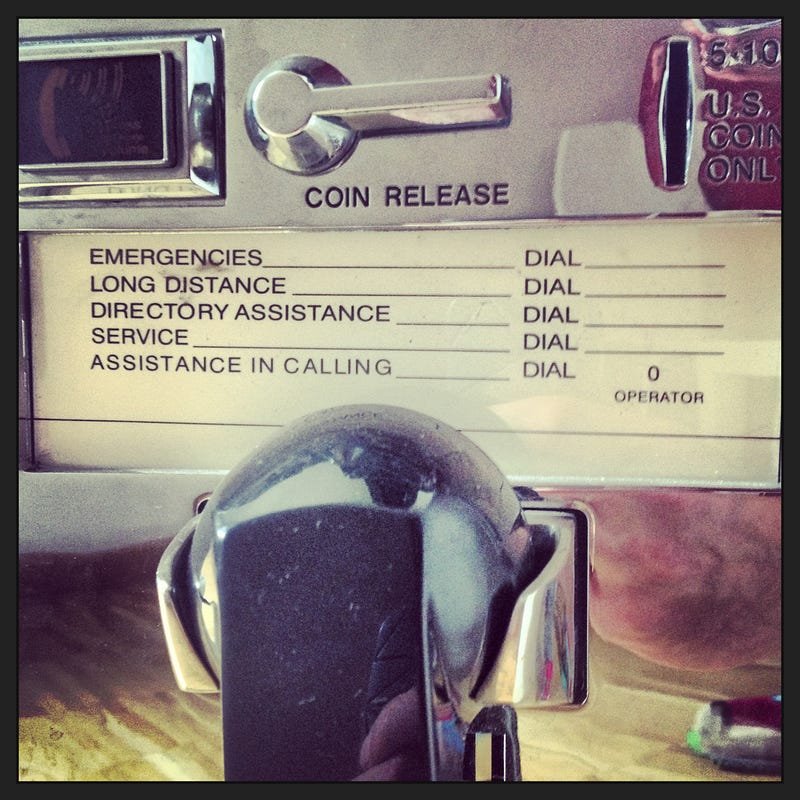

A long, long time ago... or rather on Wed Aug 24 2011 19:55:54 GMT+0200 (CEST), I decided to write a blog post about the need to teach our computers some human language. Not as an academic project; that had already been done, with more or less success, in the past. I am talking about the computers that you, me and our moms use every day: smartphones, ATMs, washing machines, airport panels. I started the blog post and realized that most people would probably find the problem non-existent. We have simply gotten used to the reality that computers are no good at ‘soft skills’. There are mountains of books on how to do computer input better and a lot of knowledge about how to do processing better, but computer output seems to be the least questioned area, one that has barely evolved since the Xerox PARC days.

We live in the future, at least as seen from the perspective of a 20th-century science fiction writer, but strangely enough the output of our powerful thinking machines is not that different from the punch cards of the 1970s. Even the shiniest screens on the slickest devices mostly just display nicely formatted database tables with some buttons on top. Look past all the gradients, color schemes and beautiful typography: how much intelligence can you read there? Do you think your favorite gadget is ready for a Turing test? Is it even smarter than ELIZA from the 1960s?

It’s not all hopeless, though. Here’s the most basic example: back in 2008 I noticed some websites using a ‘pretty date’ plugin. It would take a date from a database, such as 2008-01-28T20:24:17Z, and generate a much more readable ‘3 days ago’ instead. You could argue about the actual value of that particular change, but it’s clear and feels much more ‘human’ than the robotic timestamp. Strangely, ‘pretty date’ was not followed by an immediate wave of user interface humanization, even though the work didn’t have to start from scratch. Then, in October 2011, came Siri, an invisible virtual assistant that can understand and respond in human language. Even with its presence on millions of iPhones, it seems to have stayed a novelty feature rather than the preferred user interface. Siri’s illegitimate sisters seem to enjoy even less popularity.
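To show how little machinery this kind of humanization needs, here is a minimal Python sketch of a ‘pretty date’ function. It is not the code of the plugin I saw back then; the name `pretty_date`, the thresholds and the wording are my own assumptions, and the current time is passed in explicitly so the output is predictable.

```python
from datetime import datetime, timezone


def pretty_date(timestamp: str, now: datetime) -> str:
    """Turn an ISO 8601 UTC timestamp into a rough, human-friendly phrase."""
    # Older Pythons' fromisoformat() rejects a trailing "Z", so normalize it first.
    then = datetime.fromisoformat(timestamp.replace("Z", "+00:00"))
    seconds = int((now - then).total_seconds())
    if seconds < 0:
        return "in the future"
    if seconds < 60:
        return "just now"
    minutes = seconds // 60
    if minutes < 60:
        return "1 minute ago" if minutes == 1 else f"{minutes} minutes ago"
    hours = minutes // 60
    if hours < 24:
        return "1 hour ago" if hours == 1 else f"{hours} hours ago"
    days = hours // 24
    if days == 1:
        return "yesterday"
    if days < 7:
        return f"{days} days ago"
    weeks = days // 7
    if weeks < 5:
        return "1 week ago" if weeks == 1 else f"{weeks} weeks ago"
    # Beyond roughly a month, a plain calendar date reads better than "9 weeks ago".
    return then.strftime("%B %d, %Y")
```

With `now = datetime(2008, 1, 31, 21, 0, tzinfo=timezone.utc)`, calling `pretty_date("2008-01-28T20:24:17Z", now)` returns `"3 days ago"`.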

It seems that most UI design effort went, and still goes, toward making computer output more visually attractive, leaving our experiences sadly one-sided. I understand that, like most technological developments, it’s a result of the existing, or rather nonexistent, tools. I mean, we have Photoshop, Ruby on Rails, Keynote and tons of other great and less great software tools that let us take data and make something nice-looking and often even usable. But we don’t have a $4.99 app in the App Store that can take a database, crunch it and produce a short but informative statement, comparable to your daily horoscope. If all you have is Photoshop, all your problems start to look like layouts.

I had a dream, a dream that one day I would be able to install an app on my phone, the ultimate news app of all time. I would open it in the morning and it would tell me: ‘Good morning, Matas. Nothing important happened in the last 24 hours. Live your life.’ Is that too much to ask? As it turns out, it is.

The giants of our digital world, such as Google and Apple, are already investing their close-to-unlimited resources in teaching computers how to tell stories. The results are still very limited, mostly one-liners that wouldn’t even cut it for a minor B-movie character, as if the makers weren’t brave enough to talk in paragraphs. There is some exciting progress, though: I was thrilled to see that a group of former Google employees created a product that can, for example, take a bunch of baseball stats and produce a fairly readable sports newspaper article.

Why do I want computers to talk, or even to tell us stories? Well, apart from the natural feeling that we are missing an opportunity to make our lives more comfortable, I think it’s time to shed the heavy legacy of phone switches, rocket launch systems and business machines, and see how we can take at least one step closer toward true home machines: intelligent assistants rather than calculators with HD screens. Think protocol droids.

Those of us living outside Southern California have to deal with a phenomenon called weather. Some of you probably share my frustration: the most exciting weather apps are still somewhat inferior to the forecast on the local radio. What is the value of 3D rendering and flawless animation if I still don’t know which shoes my daughter should wear to school today? How much design and development time went into making an app look nice, versus making it state its facts in human terms?
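The shoe question is really a tiny data-to-text problem: a few forecast numbers in, one sentence of advice out. Here is a rough Python sketch of that idea; the `Forecast` fields, the thresholds and the phrasing are all hypothetical, chosen for illustration rather than taken from any real weather app or API.

```python
from dataclasses import dataclass


@dataclass
class Forecast:
    # Hypothetical input: numbers a weather service might report for the school day.
    min_temp_c: float
    max_temp_c: float
    rain_probability: float  # 0.0 to 1.0
    snow_cm: float


def shoe_advice(f: Forecast) -> str:
    """Turn raw forecast numbers into one plain-language recommendation."""
    # Rules are checked in priority order; the thresholds are illustrative guesses.
    if f.snow_cm >= 1:
        return f"Snow boots today: about {f.snow_cm:g} cm of snow is expected."
    if f.rain_probability >= 0.5:
        return f"Rain boots today: there is a {f.rain_probability:.0%} chance of rain."
    if f.min_temp_c < 5:
        return f"Warm closed shoes today: it will get as cold as {f.min_temp_c:g} °C."
    if f.max_temp_c >= 22:
        return f"Sandals are fine today: dry and up to {f.max_temp_c:g} °C."
    return "Regular sneakers today: mild and dry."
```

For example, `shoe_advice(Forecast(2.0, 8.0, 0.7, 0.0))` returns `"Rain boots today: there is a 70% chance of rain."` A real app would need far more nuance, but even a handful of hand-written rules like these answers the question that a 3D rendering doesn’t.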

Who could fix that? Maybe an upcoming generation of text developers and language designers? I’d like to see more job requirements such as: ‘Implementing syntactically perfect stories, entertaining users with personalized language and vocabulary, needs to be familiar with the main realization frameworks’. Just reading this makes it clear how far we are from making it a reality.

Why does it matter? Well, you might have heard about the Internet of Things, wearable computing and so on. The funny thing is that many of the prototypes fail simply because they feature the most overused output device of all: a screen. Have you ever seen one of those fridges with Wi-Fi and a touch screen?

If you would rather listen to your gadgets, you no longer care about margins and kerning; you want the relevant information delivered in clear and simple sentences, maybe spoken in a pleasant voice. If it’s time to pay the electricity bill, I’d like to know how much power we have consumed in the last 12 months and what specifically we can do to reduce the bill. If there is an airport strike today, I want to know about it before I get to the waiting hall with 2000 other angry people. I am asking for intelligence and for eloquence.
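The electricity bill example can be sketched the same way: aggregate the last 12 months, then point at the largest consumer. This is only an illustration under loud assumptions: the per-appliance breakdown is a hypothetical input (imagine a smart meter that reports it), and the name `bill_summary`, the currency-free formatting and the wording are my own.

```python
def bill_summary(monthly_kwh: list[float], price_per_kwh: float,
                 appliance_kwh: dict[str, float]) -> str:
    """Summarize a year of electricity use in two plain sentences."""
    if len(monthly_kwh) != 12:
        raise ValueError("expected exactly 12 monthly readings")
    total = sum(monthly_kwh)
    cost = total * price_per_kwh
    # The appliance with the largest consumption is the most promising place to save.
    top_name, top_kwh = max(appliance_kwh.items(), key=lambda item: item[1])
    share = top_kwh / total
    return (
        f"Over the last 12 months you used {total:,.0f} kWh, "
        f"which cost about {cost:,.2f} at {price_per_kwh:.2f} per kWh. "
        f"The {top_name} accounted for {share:.0%} of that, "
        f"so it is the first place to look for savings."
    )
```

With twelve made-up monthly readings totalling 2,815 kWh, a price of 0.25 per kWh and a water heater using 950 kWh, it returns “Over the last 12 months you used 2,815 kWh, which cost about 703.75 at 0.25 per kWh. The water heater accounted for 34% of that, so it is the first place to look for savings.”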

We’ll probably have to develop a whole new way of designing, developing and testing such software. And let’s not forget the people who will build all that: it’s naive to expect that the same teams who have been building products the old way for years will be able to change their mindset. Brian Eno once said, “If you want to make computers that really work, create a design team composed only of healthy, active women with lots else to do in their lives and give them carte blanche”. Maybe there is finally an opportunity to apply his advice?

I would dare to speculate that the most successful gadget of the next decade won’t even have a screen. It might also take a generation of users to get used to actually reading or listening to computers rather than scanning and scrolling screens. But I believe that we’ll eventually get there, and my daughter won’t even have to know what shoes her own daughter should put on for school. The droid will take care of that.